Since unit testing and test-driven development burst onto the programming scene in the early 2000s, too many programmers have deluded themselves into thinking that they could ship high-quality software with automated testing alone. It’s a mirage.

Don’t get me wrong. The industry took a big leap forward when the tooling and conventions for automated testing got put in the spotlight. But in many corners, it also threw the baby out with the bathwater. Automated testing does not replace “testing by hand”; it augments it.

Testing by hand, or exploratory testing, is a crucial technique for ferreting out issues off the happy path. It is best carried out by dedicated testers who did not work on the implementation. Those pesky auditors who have the nerve to try using the application in all the ways a real user might.

None of this is news, of course. I remember reading a statistic long ago saying that Microsoft had three testers for every developer. That sounds wild to me, but I suppose if you’re trying to keep three decades of backwards compatibility going, maybe you do need that sort of firepower.

What it is, though, is forgotten wisdom. Especially in the small and mid-sized shops. Propelled by the idea that automation could take care of the testing, dedicated testers weren’t even on the menu in many establishments.

For many years that included us at Basecamp. Yeah, sure, programmers and designers would sorta click through a feature and make sure it sorta worked. Then we’d ship it and see what users found.

But we leveled up in a big way when Michael Berger became the first dedicated tester at Basecamp several years ago. He’s since been joined by Ann Goliak. And between the two of them, we’ve never shipped higher-quality software. Far more issues are caught in the dedicated QA rounds that precede all major releases.

What Ann and Michael bring to the table just cannot be replicated by programmers writing automated tests. We still write lots of those, and they serve as a very useful guide during development, and form a strong regression suite. But they’re woefully insufficient. And it doesn’t matter whether they’re unit, functional, model, or even system tests. They’re no substitute for a fresh pair of human eyes bent on breakage.

I hope we start seeing a renaissance for human testers at some point. Not just as something to do if there’s time, but something for dedicated individuals to do because it’s effective. Long live manual testing!

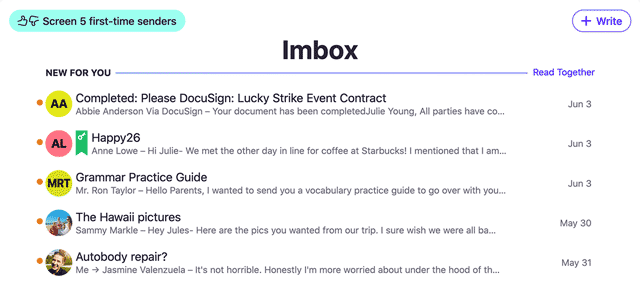

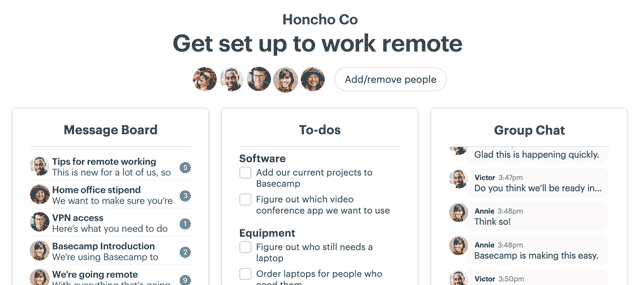

Ann and Michael just finished testing a major upgrade to the todos feature in Basecamp 3. You should give it a try.