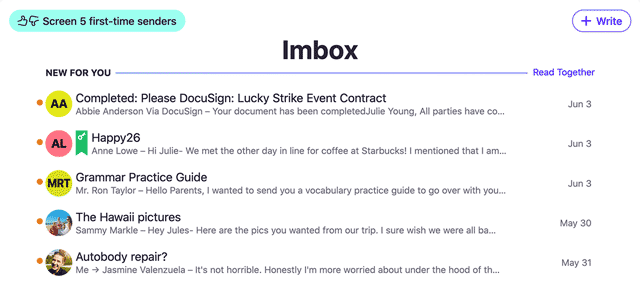

Basecamp’s newest product HEY has lived on Kubernetes since development first began. While our applications are majestic monoliths, a product like HEY has numerous supporting services that run alongside the main app, like our mail pipeline (Postfix and friends), Resque (and Resque Scheduler), and nginx, making Kubernetes a great orchestration option for us.

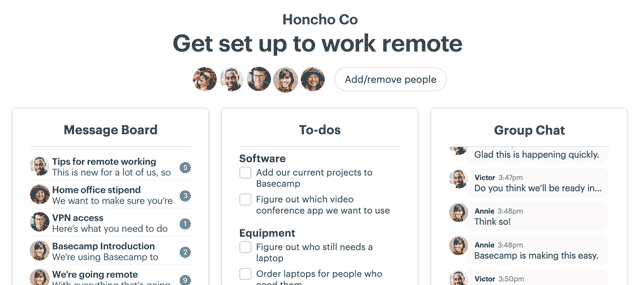

As you work on code changes or new feature additions for an application, you naturally want to test them somewhere — either in a unique environment or in production via feature flags. For our other applications like Basecamp 3, we make this happen via a series of numbered environments called betas (beta1 through betaX). A beta environment is essentially a mini production environment — it uses the production database but everything else (app services, Resque, Redis) is separate. In Basecamp 3’s case, we have a claim system via an internal chatbot that shows the status of each beta environment (here, none of them are claimed):

Our existing beta setup is fine, but what if we could do something better with the new capabilities that Kubernetes affords us? Indeed we can! After reading about GitHub’s branch-lab setup, I was inspired to come up with a better solution for beta environments than our existing claims system. The result is what’s in use today for HEY: a system that (almost) immediately deploys any branch to a branch-specific endpoint that you can access right away to test your changes, without having to use the claims system or talk to anyone else, along with an independent job-processing fleet and Redis instance to support the environment.

Let’s walk through the developer workflow

- A dev is working on a feature addition to the app, aptly named new-feature.

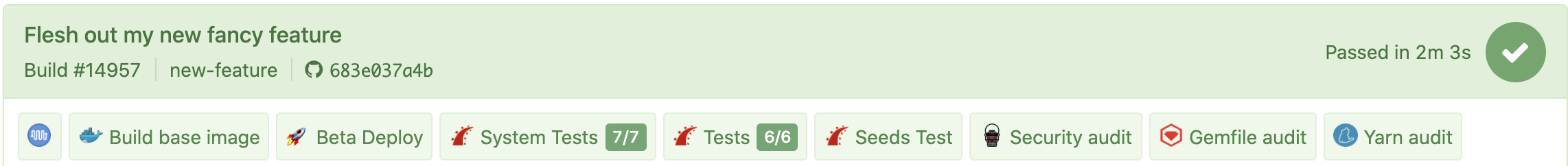

- They make their changes in a branch (called new-feature) and push them to GitHub which automatically triggers a CI run in Buildkite:

- The first step in the CI pipeline builds the base Docker image for the app (all later steps depend on it). If the dev hasn’t made a change to Gemfile/Gemfile.lock, this step takes ~8 seconds. Once that’s complete, it’s off to the races for the remaining steps, but most importantly for this blog post: Beta Deploy.

- The “Beta Deploy” step runs

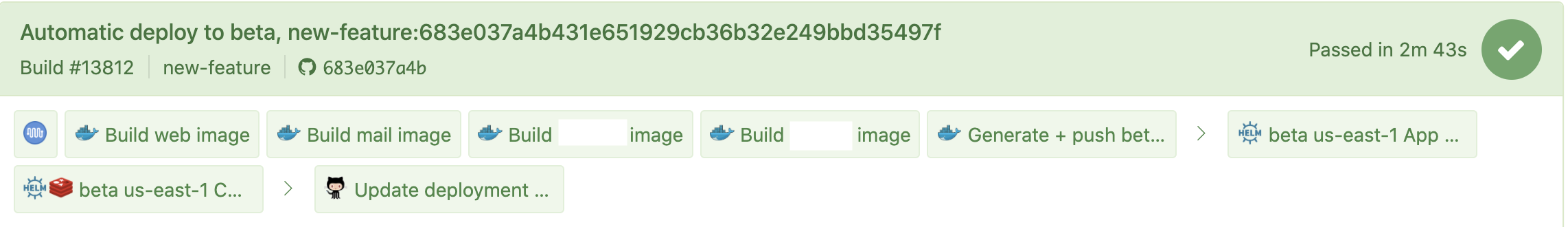

bin/deploywithin the built base image, creating a POST to GitHub’s Deployments API. In the repository settings for our app, we’ve configured a webhook that responds solely to deployment events — it’s connected to a separate Buildkite pipeline. When GitHub receives a new deployment request, it sends a webhook over to Buildkite causing another build to be queued that handles the actual deploy (known as the deploy build). - The “deploy build” is responsible for building the remainder of the images needed to run the app (nginx, etc.) and actually carrying out the Helm upgrades to both the main app chart and the accompanying Redis chart (that supports Resque and other Redis needs of the branch deploy):

- From there, Kubernetes starts creating the deployments, statefulsets, services, and ingresses needed for the branch, and a minute or two later the developer can access their beta at https://new-feature.corp.com. (If this isn’t the first time the branch has been deployed, there’s no initialization step; the deploy just changes the images running in the existing deployments.)
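The “Beta Deploy” step above boils down to a single HTTP call to GitHub. Below is a minimal sketch of building that request with Python’s standard library, assuming a token with access to the repository. The `environment` value, the `payload` contents, and the empty `required_contexts` are illustrative choices, not necessarily what `bin/deploy` sends. An empty `required_contexts` tells GitHub to skip commit-status checks, so the deployment can be created while CI is still running.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_deployment_request(owner: str, repo: str, ref: str, token: str,
                             environment: str = "beta") -> urllib.request.Request:
    """Build (but do not send) a POST to GitHub's Deployments API for `ref`."""
    body = {
        "ref": ref,                  # branch name (or SHA) to deploy
        "environment": environment,  # illustrative; mirrors `bin/deploy beta`
        "auto_merge": False,         # don't merge the default branch in first
        "required_contexts": [],     # skip commit-status checks (CI is still running)
        "payload": {"branch": ref},  # free-form JSON echoed in the deployment webhook
    }
    return urllib.request.Request(
        url=f"{GITHUB_API}/repos/{owner}/{repo}/deployments",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Sending it is `urllib.request.urlopen(req)`. GitHub then delivers a `deployment` event to the configured webhook, and that is what queues the deploy build.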

What if a developer wants to manage the deploy from their local machine instead of having to check Buildkite? No problem: the same `bin/deploy` script that’s used in CI works just fine locally:

```console
$ bin/deploy beta
[✔] Queueing deploy
[✔] Waiting for the deploy build to complete : https://buildkite.com/new-company/great-new-app-deploys/builds/13819
[✔] Kubernetes deploy complete, waiting for Pumas to restart
Deploy success! App URL: https://new-feature.corp.com
```

(`bin/deploy` also takes care of verifying that the base image has already been built for the commit being deployed. If it hasn’t, it’ll wait for the initial CI build to make it past that step before continuing on to queueing the deploy.)
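`bin/deploy` waits twice: first for the base image, then for the app endpoint to come up healthy. Both waits are a poll-with-deadline loop. The sketch below injects the clock and sleep so the loop can be tested without real delays. Treating every network error as “not ready yet” is an assumption. The base-image check would be a hypothetical call against your CI’s API and is not shown.

```python
import time
import urllib.request
from typing import Callable


def wait_until(check: Callable[[], bool], timeout: float = 600.0, interval: float = 5.0,
               sleep: Callable[[float], None] = time.sleep,
               clock: Callable[[], float] = time.monotonic) -> bool:
    """Call `check` every `interval` seconds until it returns True or `timeout` passes."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def endpoint_healthy(url: str) -> bool:
    """True if `url` answers with HTTP 200.

    The usual failures (DNS errors, refused connections, HTTP errors, timeouts)
    surface as OSError subclasses and are treated as 'not ready yet'.
    """
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except OSError:
        return False
```

Usage might look like `wait_until(lambda: endpoint_healthy("https://new-feature.corp.com"))`. Each failed lookup during this loop can be negatively cached by the local resolver, which is the DNS caveat discussed later in this post.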

Remove the blanket!

Sweet, so the developer workflow is easy enough, but there’s got to be more going on below the covers, right? Yes, a lot. But first, story time.

HEY runs on Amazon EKS, AWS’ managed Kubernetes product. While we wanted to use Kubernetes, we don’t currently have enough bandwidth on the operations team to run a bare-metal Kubernetes setup (or to rely on something like Kops on AWS), so we’re more than happy to pay AWS a few dollars per month to manage our cluster masters for us.

While EKS is a managed service and relatively integrated with AWS, you still need a few other pieces installed to do things like create Application Load Balancers (what we use for the front-end of HEY) and touch Route53. For those two pieces, we rely on the aws-alb-ingress-controller and external-dns projects.

Inside the app Helm chart we have two Ingress resources (one external, and one internal for cross-region traffic that stays within the AWS network) that have all of the right annotations to tell alb-ingress-controller to spin up an ALB with the proper settings (health-checks so that instances are marked healthy/unhealthy, HTTP→HTTPS redirection at the load balancer level, and the proper SSL certificate from AWS Certificate Manager) and also to let external-dns know that we need some DNS records created for this new ALB. Those annotations look something like this:

```
Annotations:
  kubernetes.io/ingress.class: alb
  alb.ingress.kubernetes.io/listen-ports: [{"HTTP": 80},{"HTTPS": 443}]
  alb.ingress.kubernetes.io/scheme: internet-facing
  alb.ingress.kubernetes.io/ssl-policy: ELBSecurityPolicy-TLS-1-2-2017-01
  alb.ingress.kubernetes.io/actions.ssl-redirect: {"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}
  alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:############:certificate/########-####-####-####-############
  external-dns.alpha.kubernetes.io/hostname: new-feature.us-east-1.corp.com.,new-feature.corp.com.
```

alb-ingress-controller and external-dns are both Kubernetes controllers that constantly watch cluster resources for annotations they know how to handle. In this case, external-dns knows that it shouldn’t create a record for this Ingress resource until it has been issued an Address, which alb-ingress-controller takes care of in its own control loop. Once an ALB has been provisioned, alb-ingress-controller tells the Kubernetes API that this Ingress has address X, and external-dns carries on creating the appropriate records in the appropriate Route53 zones. Here, external-dns creates an ALIAS record pointing to Ingress.Address and a TXT ownership record within the same Route53 zone (a zone in the same AWS account as our EKS cluster that has been delegated from the main app domain just for these branch deploys).
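The hostname annotation is derived from the branch name. Git branch names allow characters that DNS labels don’t (`/`, `_`, uppercase), so some sanitizing step is needed. The sketch below shows one way to do it with a hypothetical `branch_to_dns_label` helper, and builds a subset of the annotations above for a given branch. It is an assumption for illustration, not the chart’s actual template logic.

```python
import json
import re


def branch_to_dns_label(branch: str) -> str:
    """Map a git branch name to a DNS label (RFC 1123: a-z, 0-9, '-', at most 63 chars)."""
    label = re.sub(r"[^a-z0-9-]+", "-", branch.lower())  # "Feature/Foo_bar" -> "feature-foo-bar"
    label = label.strip("-")[:63].rstrip("-")  # labels may not start or end with '-'
    if not label:
        raise ValueError(f"branch {branch!r} has no usable characters")
    return label


def ingress_annotations(branch: str, region: str, zone: str, cert_arn: str) -> dict:
    """A subset of the external Ingress annotations shown above, for one branch."""
    host = branch_to_dns_label(branch)
    return {
        "kubernetes.io/ingress.class": "alb",
        "alb.ingress.kubernetes.io/scheme": "internet-facing",
        "alb.ingress.kubernetes.io/listen-ports": json.dumps([{"HTTP": 80}, {"HTTPS": 443}]),
        "alb.ingress.kubernetes.io/certificate-arn": cert_arn,
        # Regional and global names, comma-separated with trailing dots, as above.
        "external-dns.alpha.kubernetes.io/hostname": f"{host}.{region}.{zone}.,{host}.{zone}.",
    }
```

Truncating to 63 characters can make two long branch names collide. A real implementation might append a short hash of the full branch name.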

These things cost money, right? What about the clean-up!?

Totally, and at the velocity that our developers are working on this app, it can rack up a small bill in EC2 spot instance and ALB costs if we have 20-30 of these branches deployed at once running all the time! We have two methods of cleaning up branch-deploys:

- a GitHub Actions-triggered clean-up run

- a daily clean-up run

Both of these run the same code each time, but they target different things. The GitHub Actions-triggered run goes after deploys for branches that have just been deleted; it is triggered whenever a delete event occurs in the repository. The daily clean-up run goes after deploys that are more than five days old (we do this by comparing the current time with the last deployed time from Helm). We’ve experimented with different lifespans on branch deploys, but five works for us: three is too short, seven is too long. It’s a balance.
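Because both clean-up runs execute the same code with different targets, the selection step can be a single function. The sketch below assumes the release list has already been parsed into branch names and last-deployed timestamps, for example from `helm list --output json`. `BranchDeploy` and `select_for_cleanup` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

MAX_AGE = timedelta(days=5)  # the lifespan that "works for us" per the post


@dataclass
class BranchDeploy:
    branch: str
    last_deployed: datetime  # last deploy time reported by Helm (timezone-aware)


def select_for_cleanup(deploys: List[BranchDeploy], now: datetime,
                       deleted_branch: Optional[str] = None,
                       max_age: timedelta = MAX_AGE) -> List[str]:
    """Pick branch deploys to tear down.

    With `deleted_branch` (the GitHub Actions run), target only that branch.
    Without it (the daily run), target everything older than `max_age`.
    """
    if deleted_branch is not None:
        return [d.branch for d in deploys if d.branch == deleted_branch]
    return [d.branch for d in deploys if now - d.last_deployed > max_age]
```

Usage: the daily job would call `select_for_cleanup(deploys, datetime.now(timezone.utc))`, while the delete-event job passes the deleted branch name.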

When a branch is found and marked for deletion, the clean-up build runs the appropriate helm delete commands against the main app release and the associated Redis release, causing a cascading effect of Kubernetes resources to be cleaned up and deleted, the ALBs to be de-provisioned, and external-dns to remove the records it created (we run external-dns in full-sync mode so that it can delete records that it owns).
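Tearing a branch down is then two Helm calls, one per release. This sketch assumes Helm 3, where `helm delete` is an alias of `helm uninstall`. It also assumes a hypothetical release-naming scheme: `<branch>` for the app and `<branch>-redis` for Redis. The runner is injectable, so the commands can be inspected without a cluster.

```python
import subprocess
from typing import Callable, List


def cleanup_commands(branch_label: str, namespace: str = "default") -> List[List[str]]:
    """Helm commands that remove a branch deploy's app and Redis releases."""
    releases = [branch_label, f"{branch_label}-redis"]  # hypothetical naming scheme
    return [["helm", "uninstall", r, "--namespace", namespace] for r in releases]


def run_cleanup(branch_label: str, namespace: str = "default",
                runner: Callable[..., object] = subprocess.run) -> None:
    # Removing the releases deletes their Kubernetes resources, including the
    # Ingresses; the ALB controller then de-provisions the ALBs, and external-dns
    # (in sync mode) removes the Route53 records it owns.
    for cmd in cleanup_commands(branch_label, namespace):
        runner(cmd, check=True)
```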

Other bits

- We’ve also run this setup using Jetstack’s cert-manager for issuing certs with Let’s Encrypt for each branch deploy, but dropped it in favor of wildcard certs managed in AWS Certificate Manager, because hell hath no fury like me opening my inbox every day to find 15 cert expiration emails in it. It also added several extra minutes to the deploy provisioning timeline for new branches: rather than just having to wait for the ALB to be provisioned and the new DNS records to propagate, you also had to wait for the certificate verification record to be created and propagate, for Let’s Encrypt to issue your cert, etc., etc.

- DNS propagation can take a while, even if you remove the costly certificate issuance step. This was particularly noticeable if you used `bin/deploy` locally, because the last step of the script is to hit the endpoint for your deploy over and over again until it’s healthy. This meant that you could end up caching an empty DNS result, since external-dns may not have created the record yet (likely, in fact, for new branches). We mitigate this by setting a low negative caching TTL on the Route53 zone that we use for these deploys.

- There’s a hard limit on the number of security groups that you can attach to an ENI (Elastic Network Interface), and there’s only so much tweaking you can do with AWS support to maximize the number of ALBs that you can have attached to the nodes in an EKS cluster. For us this means limiting the number of branch deploys in a cluster to 30. HOWEVER, I have a stretch goal to fix this by writing a custom controller that will play off of alb-ingress-controller and create host-based routing rules on a single ALB that can serve all beta instances. This would increase the number of deploys per cluster to 95ish per ALB (an ALB has a limit on the number of rules attached to it), and it would significantly reduce the cost of the entire setup, because each ALB costs a minimum of $16/month and each deploy has two ALBs (one external and one internal).

- We re-use the same Helm chart for production, beta, and staging: the only changes are the database endpoints (between production/beta and staging), some resource requests, and a few environment variables. Each branch deploy is its own Helm release.

- We use this setup to run a full mail pipeline for each branch deploy, too. This makes it easy for devs to test their changes if they involve mail processing, allowing them to send mail to

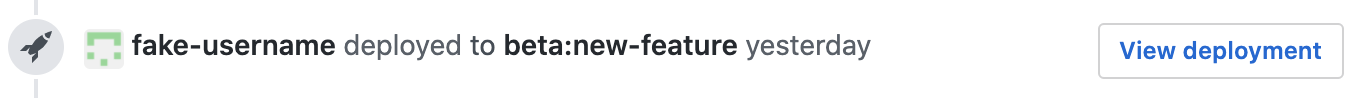

<their username>@new-feature.corp.comand have it appear in their account as if they sent it through the production mail pipeline. - Relying on GitHub’s Deployments API means that we get nice touches in PRs like this:
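The cost argument for the single-ALB stretch goal is simple arithmetic. The sketch below uses the figures quoted above: a $16/month minimum per ALB, two ALBs per deploy, and roughly 95 deploys per shared ALB. It assumes the shared design would still keep one external and one internal ALB. It ignores usage-based (LCU) charges, so treat the results as floors rather than estimates.

```python
import math

ALB_MIN_MONTHLY_USD = 16      # minimum monthly cost per ALB, as quoted above
ALBS_PER_SET = 2              # one external + one internal ALB
DEPLOYS_PER_SHARED_ALB = 95   # approximate, bounded by the per-ALB rule limit


def per_deploy_floor(deploys: int) -> int:
    """Current layout: every branch deploy gets its own pair of ALBs."""
    return deploys * ALBS_PER_SET * ALB_MIN_MONTHLY_USD


def shared_floor(deploys: int) -> int:
    """Stretch goal (assumed): host-based rules on as few shared ALB pairs as possible."""
    pairs = math.ceil(deploys / DEPLOYS_PER_SHARED_ALB)
    return pairs * ALBS_PER_SET * ALB_MIN_MONTHLY_USD


print(per_deploy_floor(30), shared_floor(30))  # 960 32
```

At the 30-deploy cap, that is a floor of roughly $960/month in ALB charges today, versus about $32/month for one shared pair.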

If you’re interested in HEY, check out hey.com and learn about our take on email.

Blake is a Senior System Administrator on Basecamp’s Operations team who spends most of his time working with Kubernetes and AWS in some capacity. When he’s not deep in YAML, he’s out mountain biking. If you have questions, send them over on Twitter – @t3rabytes.

Nice write-up. Did you investigate Google Kubernetes Engine (GKE)? It’s a much better, more mature, managed Kubernetes offering than EKS. Yes, I have used both.

Yes, HEY initially started out on GKE before being moved to EKS — we migrated a bunch of stuff from GCP to AWS for some other reasons. I liked GKE a lot though, very hands-off and feature forward (we still have a cluster running there today).

This is some fantastic work. Thank you for sharing the details!

Thanks, Michael!

Wow, this is great inspiration! We have been thinking about this for a couple of months but didn’t get to it yet. (Small team) One question, how do you handle testing unsafe methods if you use the production database? Spin up a snapshot of production, rollback,…

We still have a real staging environment for doing dangerous stuff first — migrations, etc. A dev can restore it with a production snapshot via another chatbot command, takes 30-40 minutes to complete and they get notified via chat when it’s ready to go (I wrote that system soon after joining Basecamp and I dread having to touch it to this day 😅).

Great write-up! Two questions:

1) If you run beta features against the production database, how do you handle migrations?

2) What does a typical local development environment look like? Docker-compose? Minikube?

We’ve got some unwritten internal rules that if your change needs to migrate the DB and does anything aside from column or table additions, it needs to be tested in staging instead. DHH touched on it in https://twitter.com/dhh/status/1252364787616342016.

Oh, local-dev: docker-compose for services like MySQL, Redis, and Elasticsearch, and then raw Puma via `rails s`. We’ve experimented with entire Docker-based dev setups, but they’re bulky and resource intensive compared to just containerizing the services and letting you develop the app using the tools you’re comfortable with and a standard method (`rails s` in this case).

Nice write up. For BC3 how do you handle database migrations if both betaX servers and production are using the same database? Do you migrate the database whenever you deploy to a beta server?

Same way for HEY: only migrations that are additive, whether that’s a column or a table, are allowed to run from beta. Anything else needs to go through staging first for testing prior to merging to master and migrating production.

If you *do* need to migrate, I’ve got an addition that I just wrapped up to `bin/deploy` that allows a `-m` option that’ll migrate before the rest of the deploy runs.
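The “additive only from beta” rule is unwritten. If a team wanted to enforce it mechanically before allowing something like that `-m` flag, a crude check could scan the migration for non-additive Rails schema calls. The sketch below is hypothetical and deliberately conservative. It flags any line starting with a `remove_`, `rename_`, `change_`, or `drop_` call, or with raw `execute`, so it also flags `change_table` blocks that only add columns. It misses calls made inside such a block, such as `t.remove`.

```python
import re
from typing import List

# Hypothetical deny-list of Rails migration calls that are not purely additive.
NON_ADDITIVE = re.compile(
    r"^[ \t]*(remove_\w+|rename_\w+|change_\w+|drop_\w+|execute)\b", re.MULTILINE
)


def non_additive_calls(migration_source: str) -> List[str]:
    """Return the non-additive schema calls found at the start of lines in a migration."""
    return NON_ADDITIVE.findall(migration_source)
```

An empty result would mean “probably safe to run from beta”. Anything else would go to staging first, per the rule above.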

Nice work 👏🏻 Smart use of Github deployments.

Thanks, Anton! 🤘

We use something very similar, but instead of 1 ALB per deployment, we use a single nginx ingress controller for all deployments. We have 100s of deployments in our dev cluster, from branches from many, many different apps. It works very well!

Good to hear! ingress-nginx would definitely be a nice addition to this setup, something to consider in the future when we have more bandwidth to tackle it.

Thanks Blake, awesome post.

We use a similar approach too; the only difference is that we run on Digital Ocean. They are also pretty good, with awesome documentation and lower prices than AWS or GKE.

For those who don’t want to hassle with K8, Heroku offers basically the same thing with their Heroku Review Apps 🙂

Totally! I like Digital Ocean a lot, their Kubernetes offering just wasn’t as fleshed out as we would’ve liked when we evaluated provider options (and we had some other networking things that DO wasn’t able to help us with while the big providers could).

Are you also running your Buildkite CI infrastructure in Kubernetes? Curious if you are using HPA or something similar to adjust to your build queue size.

Yes, we run all of our agents in an EKS cluster — image build agents and all. We’re using a static number of agents, but I have a side project using Fargate that is an option for some build steps that we use: https://github.com/blakestoddard/scaledkite (it’s troublesome for some of our Docker-based steps because you can’t run DinD with Fargate).

Great post!

For a total K8S beginner, what resources do you recommend for growing to this level of knowledge?

The docs on Kubernetes.io are decent IMO, and the GKE docs if you’re looking for docs on running actual clusters: https://cloud.google.com/kubernetes-engine/docs/concepts

Maybe this Auth0 post, too: https://auth0.com/blog/kubernetes-tutorial-step-by-step-introduction-to-basic-concepts/

I’m curious why you use Resque and not Sidekiq which is almost plug-n-play replacement. Resque memory consumption is just insane.

+1 🙂

DHH answered in https://twitter.com/dhh/status/1252329322582388736, not a high priority project to consider migration either.

Given your beta environments use production data, do you have any extra secure measures in place to protect customer information?

I can imagine a scenario where a dev is testing code and accidentally adds a vulnerability or debugging output that exposes production data. Or maybe a destructive action that nukes customer data by mistake – maybe a deletion is supposed to target one row and instead removes many rows.

This is insane.

I’m not trolling; I don’t have a magical better solution to try to sell you; I can’t even tell you what exactly made my brain go kind of sideways here.

I understand all of the things you’re accomplishing with this incredible spaghetti bowl of tools and knowledge domains. But I can’t help but think that somewhere along the way, we’ve lost the plot.

In the same way that DHH extols the virtues of the magical monolith as opposed to magical microservices, I think deployment and hosting of we’d-like-them-to-survive-at-scale applications is bonkers today.

To implement the scheme you’ve outlined, you need to have near-mastery-level understanding of:

* Kubernetes

* Buildkite pipelines

* Github’s Deployment API

* Helm

* Redis

* Nginx

* Amazon EKS

* Kops on AWS (in order to know that it’s an inferior option)

* Application Load Balancers and Annotations

* Route53

* aws-alb-ingress-controller

* external-dns

* AWS certificate manager

* AWS cost estimating over diverse products like EC2, ALB, etc.

* Scheduling “clean-up runs” somehow

* Jetstack and cert-manager

* ENI (what the hell is ENI?)

* Fargate, apparently

Again, I think this is in many ways awesome and it’s obvious that Blake is an effing rock-star.

But I also think that this is crazy.

All of the things above are high-level abstractions on top of the layer of hundreds of Linux components that one needs to master to even start down this rabbit hole.

Maybe it’s just that right now, in this moment, we’re stuck inside the crucible where the solutions of tomorrow are being forged.

But it feels, for all the world, like we’re making things complicated in the name of making them simple.

You had me in the first half, I’m not going to lie. But the farther I read your comment, the more I agreed with it. We *have* reached abstraction overload, and we’ve even reached the point where folks are creating abstractions for the abstractor (Kubernetes). What’s the fix? I don’t know. Kubernetes and cloud-as-a-whole are both things created to solve a small subset of problems and then got gradual (or fast) feature additions to cover X, Y, and Z use-cases, causing bloat, etc — it’s just the natural progression of most software projects. I’ll be the last person to tell you that any of these things are simple or that it is easy to become proficient in all or even a select few. Is there a value add from them? In my mind, yes, or there *can* be, but it’s all in the application of them — can you piece them together to solve some problem you’re seeing? Probably. But is it worth it? I don’t know.

Honestly I should write a whole other blog post about your comment, there’s so much I agree with and want to unpack.

Do write that post, Blake. The world needs it!

I’m incredibly happy to discover that I didn’t come off as a jerk, and that some of what I’m getting at rang true.

Looking forward to follow-ups 😉

dc

Hello

Great article. How do you manage ENV settings for the application, especially secrets? Do you keep everything encrypted in the code repository or do you use the Kubernetes ENV and secret storage?

Also: We run our clusters with a single NLB in front of a Traefik ingress controller. The advantage is that you don’t need an additional ALB for each deployment and spin-up times for new deployments are also shorter because you don’t have to wait for the ALB creation.

Hey Blake thanks for the great write up, I imagine it took some time to set up and refine the system but faster and more automated feedback is definitely a massive productivity win!

Was there a specific reason you had to use a subdomain for each branch though?

We’ve actually built a similar (though manually deployed only) system and by using nginx-ingress with a simple rewrite-target rule we were able to deploy all the branches under a preview.site.com/new-feature path, which saved a lot of the DNS/SSL certificate/load balancer provisioning hassle.